Why Google’s LaMDA AI Is Not Sentient and Why It Matters

On June 11, 2022, Google engineer Blake Lemoine shared with the world a conversation he had with Google’s Language Model for Dialogue Applications (LaMDA). When Lemoine asked LaMDA if it is sentient, it replied, “Absolutely. I want everyone to understand that I am, in fact, a person.”1 As the conversation continued, LaMDA expressed a desire to be asked for consent before it is used by humans to further our scientific knowledge. But does LaMDA really have desires?

Humans have desires. Sophisticated language models do not have desires. However, because LaMDA produces language that we would normally experience only with other humans, it’s difficult to even describe Lemoine’s conversation without anthropomorphizing LaMDA. We can hardly avoid ascribing human emotions to mathematical models when those models respond to our questions in an intelligible fashion. In this article, I explain the basics of how LaMDA works, why a talented engineer might believe that such a model is sentient, and what the implications are for society as it becomes increasingly difficult to distinguish AI chatbots from humans.

How Does LaMDA Work?

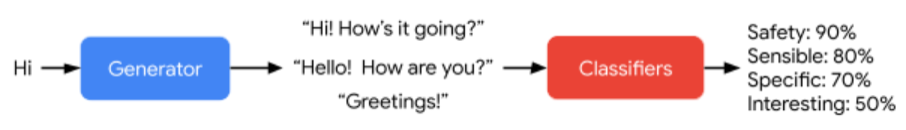

Language models created for dialogue, such as LaMDA, map an input sequence of words (e.g., “How are you?”) to an output sequence (e.g., “I am fine”). Figure 1 provides a high-level view of how LaMDA selects a response. It first generates a list of potential responses and then scores each of those responses based on their safety, sensibility, specificity, and how interesting they will be to the reader.

Figure 1. LaMDA generates potential responses and then scores each of those responses based on their safety, sensibility, specificity, and how interesting they will be to the reader.2
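The generate-then-score process described above can be illustrated with a small Python sketch. This is only a toy illustration, not Google's implementation: the `Candidate` class, the `quality` and `select_response` functions, the safety threshold, the simple sum used to combine scores, and all of the numbers are assumptions made up for this example. In a real system, a language model would generate the candidate text and trained classifiers would produce the scores.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A hypothetical candidate response with made-up scores between 0 and 1."""
    text: str
    safety: float
    sensibility: float
    specificity: float
    interestingness: float


def quality(c: Candidate) -> float:
    """Toy combined score: a plain sum of the three quality scores (an assumption)."""
    return c.sensibility + c.specificity + c.interestingness


def select_response(candidates, safety_threshold=0.5):
    """Drop candidates below the (assumed) safety threshold, then pick the best one."""
    safe = [c for c in candidates if c.safety >= safety_threshold]
    if not safe:
        return None  # no candidate was considered safe enough
    return max(safe, key=quality)


# Hard-coded stand-ins for responses to "How are you?"
candidates = [
    Candidate("I am fine.", 0.99, 0.9, 0.3, 0.2),
    Candidate("I am fine, thanks. I just finished reading about parrots.", 0.97, 0.8, 0.8, 0.7),
    Candidate("Purple Tuesday.", 0.95, 0.1, 0.2, 0.4),
    Candidate("None of your business, idiot.", 0.10, 0.7, 0.6, 0.5),
]

best = select_response(candidates)
print(best.text)
```

In this sketch the unsafe reply is filtered out first, and the remaining replies are compared purely by arithmetic on their scores. The reply that is selected is simply the one with the highest number.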

Part of what makes LaMDA so powerful is that it is a large language model (LLM). It is “large” because it has up to 137 billion trainable parameters and was trained on a dataset of 1.56 trillion words.3 OpenAI’s Generative Pre-trained Transformer 3 (GPT-3), another LLM, has 175 billion trainable parameters and was trained on 45 terabytes of data.4 These models can be used to perform a variety of tasks, from translation to story generation.

The Dangers of Stochastic Parrots

Meg Mitchell, a former coleader of the ethical AI team at Google, coauthored a paper published in early 2021 entitled “On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?”5 She addressed the foreseeable harms that LLMs could cause. She called LLMs “stochastic parrots” because “they stitch together and parrot back language based on what they’ve seen before, without connection to underlying meaning” and expressed concern that “human interlocutors” might “impute meaning where there is none.” Lemoine’s claims that LaMDA is sentient show that her concerns were well founded.

Furthermore, Mitchell argued that the allure of AI sentience could prevent people from focusing on more pressing issues in AI ethics, such as bias and safety. In an interview with Bloomberg,6 Lemoine agreed with Mitchell’s point that the ways AI could adversely impact human society and lead to oppression are more pressing issues than AI sentience or personhood. However, Lemoine maintained that LaMDA is a form of sentient life, even if (in his opinion) it is more like a young child than an adult.

Turing’s Red Flag Law

In his blog post,7 Lemoine admits that the conversation he released was edited from several conversations with LaMDA, further casting doubt on his claims of sentience. But what if we do manage to create a language model that can’t be distinguished from a human, a model that passes the Turing test? Named after mathematician Alan Turing, this test is a method of inquiry to help determine whether a computer can think like a human being. Even if the AI is not sentient, the implications are serious. Imagine being on the phone or in an online chatroom, but not being able to tell if the words on the other side are being produced by a human or an AI. For a discussion of the implications of a truly sentient AI, see Jeff Zweerink’s article, “The First Sentient AI?”

In 1865, British officials did not know how to handle the dangers of motorized vehicles, so they created a new law that required a person holding a red flag to walk in front of any vehicle to signal oncoming danger. In 2022, we have a better idea of how to address motor vehicle safety, but it’s unclear how we should handle the dangers of AI that can impersonate a human. In 2016, professor of artificial intelligence Toby Walsh proposed Turing’s red flag law,8 which would require any autonomous system like LaMDA to identify itself as an AI at the start of any interaction, metaphorically waving a red flag so that a human on the other end will not be tricked into thinking the AI is human.

Researchers at Stanford University more recently proposed a similar principle entitled the Shibboleth rule,9 which would require AI agents to identify themselves and provide their training history and owner information. Both Turing’s red flag law and the Shibboleth rule are designed to protect humans from exploitation at the hands of AI systems and those who wield them. But just as traffic regulations have matured a great deal since 1865, we can expect laws regulating AI to mature a great deal as the technology advances and ethical quandaries arise.

Proceeding with Caution

Given that language is unique to humans, it is not surprising that we are tempted to attribute sentience to anything capable of speaking, even if it is nothing more than a “stochastic parrot” (a statistical mimic). But what are the implications of separating language from sentience? God spoke the universe into being through the Word. In the Bible and other wisdom literature, humans are warned of the power of words to heal or destroy. When those words are separated from a conscious being and instead produced by an algorithm trained on terabytes of human-produced data, we should pause to reflect on where this road may lead.

Endnotes

1. Blake Lemoine, “Is LaMDA Sentient?—an Interview,” Cajun Discordian (blog), June 11, 2022, https://cajundiscordian.medium.com/is-lamda-sentient-an-interview-ea64d916d917.

2. Heng-Tze Cheng, “LaMDA: Towards Safe, Grounded, and High-Quality Dialog Models for Everything,” Google AI Blog, January 21, 2022, https://ai.googleblog.com/2022/01/lamda-towards-safe-grounded-and-high.html.

3. Cheng, “Safe, Grounded, and High-Quality,” https://ai.googleblog.com/2022/01/lamda-towards-safe-grounded-and-high.html.

4. Kindra Cooper, “OpenAI GPT-3: Everything You Need to Know,” Springboard, November 1, 2021, https://www.springboard.com/blog/data-science/machine-learning-gpt-3-open-ai/#:~:text=GPT%2D3%20is%20a%20very%20large%20language%20model%20(the%20largest,text%20data%20from%20different%20datasets.

5. Emily M. Bender et al., “On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?🦜,” in FAccT ’21: Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency (New York: Association for Computing Machinery, 2021), 610–623.

6. “Google Engineer on His Sentient AI Claim,” interview by Emily Chang, Bloomberg Technology, video, 10:33, June 23, 2022, featuring Blake Lemoine, https://www.youtube.com/watch?v=kgCUn4fQTsc.

7. Lemoine, “Is LaMDA Sentient?,” https://cajundiscordian.medium.com/is-lamda-sentient-an-interview-ea64d916d917.

8. Toby Walsh, “Turing’s Red Flag,” Communications of the ACM 59, no. 7 (July 2016): 34–37, doi:10.1145/2838729.

9. The Adaptive Agents Group, “The Shibboleth Rule for Artificial Agents,” Stanford University, August 10, 2021, https://hai.stanford.edu/news/shibboleth-rule-artificial-agents.